Why VCs should focus less on empirical data

When screening for their next investment, some VCs apply a negative selection process: they exclude companies (or treat them as higher risk) when those companies have traits that, based on past experience or empirical data, the VCs believe will lead to an unsuccessful outcome. Such traits may include a single founder, founders with no industry experience or prior founding experience, and a location outside Silicon Valley. Empirical data is useful in a positive selection process, such as picking companies with high revenue growth. VCs should be more careful when using it for risk management, however, because doing so increases the risk of making both Type I errors (false positives) and Type II errors (false negatives).

Skewed and asymmetric distribution: Risk of Type II error

VCs are well aware that the game they play has a skewed and asymmetric outcome distribution. In other words, there are often a few mega-winners, and the rest break even at best. This is why VCs aim for large wins in potentially huge markets, so that one or two mega-wins can pay back all the losses from their other investments many times over. Public equity markets show a similar return distribution, and they resemble the VC industry in that a large sum of capital chases a few wins. As Charlie Munger famously said:

“If you took our top fifteen decisions out, we’d have a pretty average record”

- Charlie Munger

Naturally, mega-wins are rare: the larger the win, the less often it occurs. Following this logic, a rare mega-win can't be reliably predicted from an empirical data set made up mostly of ordinary outcomes, and some atypical quality must play a role in producing such rare events.
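To make the skew concrete, here is a toy simulation. It is a sketch under loudly stated assumptions: outcomes are drawn from a Pareto distribution with a hypothetical shape parameter `ALPHA = 1.16` (a value often associated with the "80/20" rule), not fitted to any real VC data, and the helper `top_share` is an illustrative name. The model also ignores losses (every draw is at least 1x), so it only illustrates the shape of the tail: big outcomes are much rarer than small ones, and a small fraction of outcomes accounts for most of the total.

```python
import random

# Assumption: outcome sizes follow a Pareto distribution with shape ALPHA.
# 1.16 is an illustrative "80/20" value, not an estimate from real VC data.
ALPHA = 1.16
N_STARTUPS = 10_000


def top_share(outcomes: list[float], top_fraction: float) -> float:
    """Fraction of the total outcome contributed by the best `top_fraction` of draws."""
    ranked = sorted(outcomes, reverse=True)
    k = max(1, int(len(ranked) * top_fraction))
    return sum(ranked[:k]) / sum(ranked)


rng = random.Random(42)  # fixed seed so the run is reproducible
# paretovariate(alpha) returns values >= 1 with P(X > x) = x ** -alpha
outcomes = [rng.paretovariate(ALPHA) for _ in range(N_STARTUPS)]

# The bigger the outcome, the fewer startups reach it.
for threshold in (10, 100, 1_000):
    count = sum(1 for x in outcomes if x > threshold)
    print(f"outcomes above {threshold:>5}x: {count}")

print(f"share of total from top 1%:  {top_share(outcomes, 0.01):.0%}")
print(f"share of total from top 10%: {top_share(outcomes, 0.10):.0%}")
```

Under this assumed shape, the expected number of draws above 10x, 100x and 1,000x is roughly 690, 48 and 3 out of 10,000, so each tenfold jump in outcome size makes it about fourteen times rarer.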

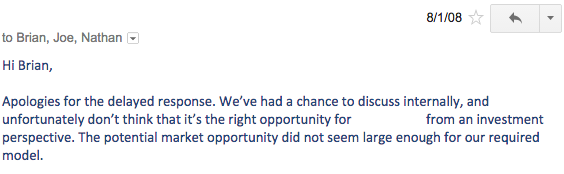

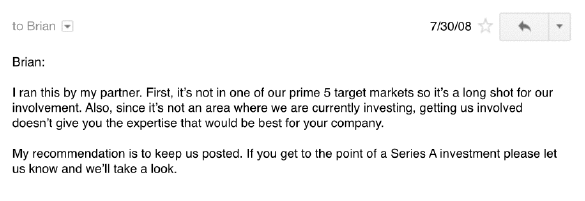

This is why some of today's best-known mega-wins, such as Airbnb, had difficulty raising funds in their early days. Brian Chesky has shared on Medium some of the rejection emails Airbnb received, and they show investors applying a negative selection process: no technical talent, not in a focus area, and so on. While each reason is valid on its own, together they show investors trying to fit the opportunity into a model shaped by empirical data.

Given that Airbnb was at a very early stage (raising $150,000 at a $1.5M valuation), what would an alternative history look like if the VCs had focused more on the unique path the founders had taken? Could it have saved them from a Type II error? Setting hindsight bias aside, what if the VCs had not applied the empirical model and had instead asked “How is this team unique or different?” or “How does it challenge our model and assumptions?” Could those questions have at least prompted another layer of questions about the founding team, rather than a swift rejection?

As stated earlier, rare events can't be predicted precisely because nothing like them has happened before. Hence, the VCs' decision to reject Airbnb in 2008 was probably logical given the information they had at the time. The best lesson VCs can take, then, is to remain flexible and to limit the use of empirical data in the negative selection stage of their investment process.

Increasing number of startups: Risk of Type I error

Another reason to be cautious with empirical data is that the data set itself (the number of startups founded) keeps growing. Contrary to the conventional belief that a larger sample makes data more reliable, a large sample drawn from a random, skewed and asymmetric environment may produce spurious results. This is because a larger sample raises the number of winners produced purely by chance (lucky winners), and it is very difficult to tell a true, skilled winner apart from a lucky one. Even if we can identify the skilled winners, we still face the task of isolating the true causes of their success, which is very difficult because several causes and luck often act together (in other words, a reliable multivariate analysis is almost impossible).

In other words, as the startup industry grows, VCs that rely too heavily on empirical data become more likely to make a Type I error (false positive): treating a trait shared by lucky winners as if it caused their success.
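The lucky-winner argument can be checked with a similar toy simulation. This sketch makes one deliberately extreme assumption: every startup draws its outcome from the same Pareto distribution (again with a hypothetical `alpha = 1.16`), so there is no skill at all and every large outcome is, by construction, a lucky winner. The function name `count_lucky_winners` and the 100x threshold are illustrative choices, not taken from any real data set.

```python
import random


def count_lucky_winners(n_startups: int, threshold: float = 100.0,
                        alpha: float = 1.16, seed: int = 7) -> int:
    """Count outcomes above `threshold` when every startup shares one distribution.

    Because no startup is more skilled than another, every outcome above the
    threshold is a lucky winner. `alpha` is an assumed Pareto shape parameter.
    """
    rng = random.Random(seed)
    return sum(1 for _ in range(n_startups)
               if rng.paretovariate(alpha) > threshold)


for n in (1_000, 10_000, 100_000):
    # Expected count is n * threshold ** -alpha, so it grows linearly with n.
    print(f"{n:>7,} startups -> {count_lucky_winners(n)} lucky 100x outcomes")
```

In expectation that is roughly 5, 48 and 480 lucky 100x outcomes. Because each run reuses the same seed, every larger sample contains the smaller one, so the count can only grow. The more lucky winners there are, the more likely some trait looks predictive in a backward-looking data set even though, in this model, nothing but luck separates them from the rest.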

Question the convention. Defy the herding effect.

In an environment where uniqueness and a contrarian stance have a higher chance of yielding an outsized result, it is best to question assumptions, challenge conventional wisdom, have the courage to defy the herding effect, and take empirical results with a grain of salt.

Being able to think for oneself is as important for VCs as it is for founders.